I came across a paper today that I thought I might highlight here. It’s in Nature Geoscience, the authors are Francisco Estrada, Pierre Perron & Benjamin Martinez-Lopez, and the paper is called Statistically derived contributions of diverse human influences to twentieth-century temperature changes. The goal of the paper seems to be to try and actually attribute the observed warming to human activities. The abstract ends with

Our statistical analysis suggests that the reduction in the emissions of ozone-depleting substances under the Montreal Protocol, as well as a reduction in methane emissions, contributed to the lower rate of warming since the 1990s. Furthermore, we identify a contribution from the two world wars and the Great Depression to the documented cooling in the mid-twentieth century, through lower carbon dioxide emissions. We conclude that reductions in greenhouse gas emissions are effective in slowing the rate of warming in the short term.

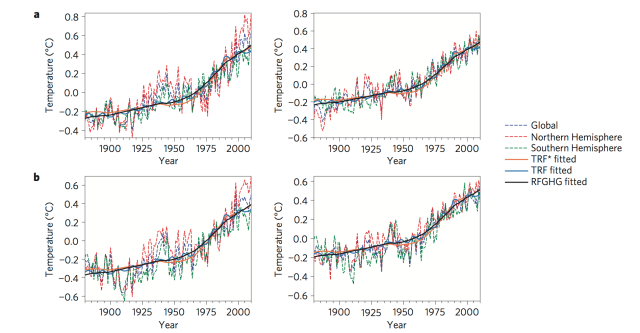

I haven’t read the paper in great detail, so was hoping that some regular commenters might have some thoughts. The main figure seems to be the one below which shows the surface temperature anomalies from NASA (left-hand panel) and HADCRUT4 (right-hand panel) with various fits to the temperature records based on different forcing approximations (RFGHG – greenhouse gases only; TRF* – all anthropogenic forcings; TRF – TRF* plus solar forcings).

Surface temperature from the NASA dataset with various fits based on different forcing approximations (Estrada et al. 2013).

What they claim is that there are various breaks in the temperature record (HADCRUT4 : Global and NH – 1956 & 1966; SH – 1909 & 1976. NASA : Global – 1956; NH – 1968; SH – 1923 & 1955). The paper then seems to go on to consider the evolution of various anthropogenic forcings, shown below. The various different anthropogenic emissions are CFCs, methane, carbon dioxide and anthropogenic aerosols. They then find various breaks in the anthropogenic forcings that seem similar to, but not quite the same as, some of the breaks in the surface temperature dataset. So, as far as I can tell, the claim is that these breaks in the anthropogenic forcings are statistically consistent with being associated with the breaks in the surface temperature dataset. It does talk about other factors, such as the Atlantic Multidecadal Oscillation, so I don’t think the paper is claiming that changes in the anthropogenic forcings, alone, were responsible for changes in the surface temperature dataset.

The paper also discusses the recent slowdown and says

The causes of a slow-down in warming since the mid-1990s have been a subject of interest. Some proposed the joint effect of increased short-lived sulphur emissions, La Nina events and the eleven-year solar cycle as offsetting the effect of rising greenhouse gas concentrations. We show that the effects of the Montreal Protocol and of changes in agricultural practices in Asia have been large enough to change the long-run path of radiative forcing. Tropospheric aerosols contributed to making this slowdown more pronounced.

So, one of the conclusions of the paper seems to be that this shows that our policies can have a measurable impact on warming, even on relatively short timescales. Additionally, the final comment of the paper is

Paradoxically the recent decrease in warming, presented by global warming sceptics as proof that humankind cannot affect the climate system, is shown to have a direct human origin.

So, I can’t really tell if this is an interesting and valuable piece of work, a bit of statistical trickery that’s trying to identify anthropogenic influences in what is clearly a quite complicated dataset, a combination of the two, or something else entirely. I’m not even sure if I’ve summarised it particularly well, so any comments from those who know more than me would be most welcome. Given that it seems to be suggesting an important role for anthropogenic aerosols in producing the recent slowdown, I thought (hoped :-)) Karsten might have some thoughts.

See also this, on the recent Cowtan and Way paper, i.e., this, discussed at RC.

They argue that the “hiatus” is substantially an artefact of coverage bias.

John, yes I’m aware of that paper. I don’t think it completely removes the slowdown. The 30-year trend is around 0.17 degrees per decade. Cowtan & Way’s analysis suggests that the trend since 1998 is probably around 0.1 degrees per decade (I realise that I’m ignoring errors here). So, it’s greater than the un-adjusted datasets would indicate but still below the long-term trend and, I think, there are still likely some anthropogenic influences (aerosols for example) that have contributed to this slowdown. Then again, I could be wrong 🙂

John, I’ve just read the RC post and that does seem to indicate that the C&W trend is about the same as the trend since 1951. I hadn’t realised that. I suspect there is still some cooling influence from anthropogenic aerosols, but maybe not as much as I’d realised.

The Cowtan & Way trend since 1998 is 0.115 ±0.166 °C/decade (2σ) (see the updated SkS Trend Calculator, maintained by Kevin Cowtan). There is no way to conclude a hiatus from that, not with any statistical significance. There is simply not enough data to reject the 30-year trend.
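The arithmetic behind that statement can be checked directly. This is only a sketch using the two figures quoted in this thread (0.115 ± 0.166 °C/decade and a long-term trend of roughly 0.17 °C/decade); the function and variable names are made up for illustration, and the interval is treated as a simple symmetric range.

```python
def trend_interval(central, half_width):
    """Return the (low, high) range implied by central ± half_width."""
    return (central - half_width, central + half_width)


def contains(interval, value):
    """True if value lies inside the closed interval."""
    low, high = interval
    return low <= value <= high


# Figures quoted in this thread, in °C/decade (names are illustrative only).
cw_since_1998 = trend_interval(0.115, 0.166)  # Cowtan & Way since 1998, 2σ
long_term_trend = 0.17                        # approximate 30-year trend

zero_is_plausible = contains(cw_since_1998, 0.0)
long_term_is_plausible = contains(cw_since_1998, long_term_trend)

print(cw_since_1998, zero_is_plausible, long_term_is_plausible)
```

Both checks come out true: the same interval that fails to exclude zero also fails to exclude the long-term trend, which is why the short record cannot be used to claim a change in trend.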

[Note for other readers, as I expect Wotts is aware of this] Most of the apparent short-term trends are simply due to the 1998 El Nino and more recent La Ninas, short term variations around the trend. Keep in mind that climate temperatures display considerable auto-correlation, and that strings of hot or cold years are only to be expected. Only when you look at enough data to separate the longer term trend and the null hypothesis of no warming can you conclude anything – and 1998-present is not enough data. Not to be pushy, but this is an issue I’ve gone around on repeatedly with ‘skeptics’ on several blogs.
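To get a feel for how much short trends can wander when the noise is autocorrelated, here is a rough simulation. Everything in it is an assumption chosen for illustration: annual data, a fixed underlying trend of 0.17 °C/decade, AR(1) noise with coefficient 0.5 and innovation standard deviation 0.1 °C. It is not fitted to any real temperature dataset.

```python
import random


def ols_slope(values):
    """Least-squares slope of values against their index (0, 1, 2, ...)."""
    n = len(values)
    x_mean = (n - 1) / 2
    y_mean = sum(values) / n
    sxx = sum((i - x_mean) ** 2 for i in range(n))
    sxy = sum((i - x_mean) * (v - y_mean) for i, v in enumerate(values))
    return sxy / sxx


def simulate(n_years, trend_per_year, phi, sigma, rng):
    """Linear trend plus AR(1) noise: noise_t = phi * noise_{t-1} + shock_t."""
    noise = 0.0
    series = []
    for year in range(n_years):
        noise = phi * noise + rng.gauss(0.0, sigma)
        series.append(trend_per_year * year + noise)
    return series


rng = random.Random(0)
TREND_PER_YEAR = 0.017  # assumed "true" trend, i.e. 0.17 °C/decade

# Fit a 15-year trend to many independent synthetic records.
short_trends = sorted(
    ols_slope(simulate(15, TREND_PER_YEAR, phi=0.5, sigma=0.1, rng=rng)) * 10
    for _ in range(2000)
)

mean_trend = sum(short_trends) / len(short_trends)
low, high = short_trends[50], short_trends[-51]  # roughly the central 95%
share_below_010 = sum(t < 0.10 for t in short_trends) / len(short_trends)

print(f"mean 15-year trend: {mean_trend:.3f} °C/decade")
print(f"central 95% range:  {low:.3f} to {high:.3f} °C/decade")
print(f"share below 0.10:   {share_below_010:.2f}")
```

Under these assumptions a noticeable share of 15-year windows shows a trend well below the true one, even though the underlying trend never changes.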

Thanks, KR. I hadn’t realised the SkS trend calculator had been updated. I agree with the latter part of your comment and it is well made. Considering the period since 1998 isn’t really enough to make any strong statements about the long-term trend.

I can’t see how one can make informed statements about air temperatures, statements which infer a direct relationship to greenhouse forcings, without an analysis of the stoichiometry of the heat involved. In other words, are reduced air temperatures the result of reduced greenhouse forcing, or simply an indicator of a temporary redistribution of incremental heat? 98% of AGW forced heat calories are being ignored if one only looks at surface air temperatures. And what do we know about the temperature record of deeper ocean strata in the second half of the twentieth century?

Roger, that was one of the issues that I had with the paper. I haven’t read it in great detail and they do talk about natural variation, but I agree that the surface temperatures are only associated with a few percent of the excess energy, so we might not expect to see such a strong link between abrupt changes in the forcings and abrupt changes in the surface temperature. Having said that, maybe what they see is reasonable. Just because the surface temperature is associated with only a few percent of the excess energy, maybe that is enough so that abrupt changes in the anthropogenic forcings will have a noticeable effect on the surface temperature record. Of course, if the Cowtan & Way analysis suggests that the slowdown isn’t actually “real” then that would seem to somewhat undermine some of the analysis here.

1) When I was growing up near Pittsburgh, PA, if you went downtown, the sun was rarely seen, given the pollution, and it seemed cooler there than far enough north of town. When the steel business collapsed, the effect seemed to go away. It would be astonishing if the huge rise in Chinese industry over the last 2 decades had zero effect. When I first visited Beijing in the late 1980s, it was mostly bicycles and the sky was rather clearer than it was the last time, ~2005.

2) But as others have said, there may well not be enough data. A fellow who had some influence on statistical thinking where I first worked has some pithy quotes, of which this is especially apropos:

“The combination of some data and an aching desire for an answer does not ensure that a reasonable answer can be extracted from a given body of data.”

About the best one can do with some of these datasets is bound uncertainty, but the bounds may be large. These attribution problems are tough, and the most useful effect of some papers is to highlight areas where we need more data. On occasion, that has stirred people to reanalyze data they already had in different ways, often seen in paleoclimate research, where time machines are unavailable to deliver modern instrumentation into the past.

Interesting, thanks John. I’ve been thinking a little along these lines myself recently. I can’t claim to have developed any saying as apt as your colleague’s.

One issue I have is that some (whose names you could probably guess) want to fixate on statistics above all else. They insist on either rejecting or accepting the null or, I guess, basing everything on p-values. One issue here is that the null isn’t always as obviously trivial in some situations as many might think (you don’t actually have a true control as one might have in medical tests). You also have actual theory that can indicate whether your trend (even if not statistically significant) might at least be consistent with what you’d expect based on the scientific modelling/theory.

The other issue I have is related to Type I and Type II errors. Just because you can’t reject the null of there being “no trend”, it doesn’t mean that there isn’t a trend. This I believe is called a type II error (a type I error being a false positive). I was reading something recently that was making the case that scientifically one might want to avoid type I errors rather than type II errors. In risk management, however, one might be willing to risk a type I error rather than a type II error (err on the side of caution one might say). It’s clearly not obvious which is the appropriate thing to consider, but it seems that even this basic discussion is impossible to have with some. Once they’ve decided that the null can’t be rejected, you’re no longer allowed to suggest that the possible trend might be something to consider.

Well, I wouldn’t presume to call John Tukey a colleague :-) but we know he was there 🙂 (Bell Labs had internal peer review for proposed external papers. If you wrote a paper, it would go up your management chain to Executive Director (likely to have 500-1000 people), across to 2 other E.D.s, down their chains for review, back up, over, and down. Statistical things would likely get sent to Tukey or others in that center. People knew to go ask first, since getting a “this is junk” back down the chain was not good.)

You might take a look at Andrew Gelman’s blog, as he often discusses such issues.

Wotts, the HadCRUT4 trend since 1950 is 0.107 +/- 0.02 C/decade. From 1965 it is 0.151 +/- 0.026 C/decade. From 1979 it is 0.155 +/- 0.042 C/decade. From 1979, the HadCRUT4 hybrid trend is 0.172 +/- 0.051 C/decade.

In a way, Real Climate pulls a swifty in that comparisons for the “pause” are normally for the period of rapid temperature rise since 1975. I have used 1965 rather than 1975 for the intermediate comparator because that is closer to the inflection point for the forcings, and 1975 was the last year of a very large two year La Nina after which we entered a period dominated by El Ninos – factors which are likely to have influenced the trend. For what it is worth, the HadCRUT4 trend from 1975 is 0.168 C/decade. The HadCRUT4 hybrid, of course, has no values prior to 1979.

I say “in a way” because it could be argued that the trend since 1950 better characterizes the forced trend in global temperatures, with the larger trends since the mid-1960s being the result of natural variation. If we did argue that, however, the forced trend would fall even further below that predicted by climate models than is currently the case. Further, that creates a significant discrepancy between the Foster and Rahmstorf corrected trends and the “forced trends”, as also between the Nielsen-Gammon ENSO grouped trends and the forced trends. As the Real Climate post on Cowtan and Way was by Stefan Rahmstorf, I do not think he would accept that F&R was so significantly flawed. Consequently, though arguable, I don’t think Stefan would argue that the recent forced trend is as low as the trend since 1950, and hence the comparison of Cowtan and Way with the 1950 trend is a bit of a fudge.

Wotts @7:26 pm, the “some” you mention are not even consistent. The correct conclusion if a short trend is not statistically distinguishable from a longer trend which includes it is that the longer trend has not changed. Ergo, based on best evidence, the current trend in global temperatures is around 0.17 C per decade, with the “pause” being too short to reject that hypothesis. If, absurdly, you conclude that a short term trend, which includes both zero and the long term trend in its confidence range, is a zero trend, then all long term trends must be made of overlapping intervals with zero trend – which is absurd.

I do, indeed, have some thoughts. Interesting paper, BUT, unfortunately, they messed it up in a pretty bad way! Like so many others, they get the aerosol effects terribly wrong. On top of that, they are unaware of the longer term volcanic impacts. No surprise they fall for Tung and Zhou in that they filter the AMO *ouch*. While their method can’t detect effects of volcanic eruptions (because of their short-term surface temperature effect), the volcanic signal is in principle detectable in the ocean for many decades. This is particularly true in a fairly radiatively balanced state of the system and with a sequence of volcanic eruptions, as was the case in the late 19th/early 20th century. Very likely the trigger for the first AMO slowdown. Different picture for the second AMO slowdown after WWII. This time it’s anthropogenic sulfur aerosols. Problem: They used the GISS forcing for aerosols which is entirely at odds with AR5 and every known aerosol emission inventory. I don’t consider it to be credible anymore. I have to admit, however, that I haven’t bothered to ask the GISS people yet as to why they still keep it online (and how they came up with it in the first place). In any case, as a result (in the paper in question) the indirect aerosol effect follows an almost straight (decreasing) line over the past century as indicated in Fig.4d. That’s simply wrong and the main reason why their whole statistical edifice is built on extremely shaky ground. Shaky, because what they attribute to AMO is in fact aerosols and volcanoes to a large extent. How this could possibly provide deeper insight into human induced temperature variability is beyond me.

Ironically, their conclusion that our policies can have a measurable impact on warming holds, albeit not exactly the way they think. Aerosols most likely had a much larger impact than CFCs could ever have.

To their credit, I’m quite sure they simply didn’t know better. They are probably good statisticians, but show considerable gaps when it comes to the physical background. It’s all the more striking as they’ve actually tested how sensitive their method is to the application of the (much more credible) “Meinshausen” aerosol direct forcing (SI Fig 9 and chapter S7). Still, they didn’t take the important step of applying the same temporal evolution to the indirect aerosol forcing and repeating the sensitivity analysis. I suspect that it might have led them to some different conclusions. As I said, not a wilful omission but rather limited knowledge about recent developments in the literature outside their field of expertise. Given that the paper was accepted shortly after the publication of AR5, a bit more care should have been taken. But then, it doesn’t come as a surprise anymore. The aerosol community still has a very very long way to go in “selling the product” 😉

Thanks for the info on the paper, could be interesting. The statistical analysis of complex systems always gives possibilities for some speculation about the causes and effects seen in the system. The lack of publications of this sort of work has been one reason to doubt (or rather, to slightly distrust) some of the work with the models, though there has probably been some done in preparing the articles on model results (I think there was at least one paper by people at GISS, on the ‘timing of glacial cycles via forcings’?), which have been cross-checked with robust statistics.

This paper’s paywalled, so I cannot judge the influence of the article (if it’s written clearly enough). But your description sounds like the authors are trying to get nearer to reality with the variables involved in the climate system, unlike some previous work (most of those using AMO, PDO as predictors of the whole system of climate). Working with the various indices generated directly from observations can easily lead to errors in scale, like R.Lambert says. Another problem always in multivariate statistics is the way an unknown relationship is excluded/included. This is not such a problem with climate data as in other sciences, but still, there are (or may be) some discontinuities in the behavior of some. The change in the response function over time is a problem (e.g. when exactly does a glacier calve? does it rather crumble in place?). In Ecology, I think the standard way is to assume nature as a monolithic unchanging system, so the multivariate analysis is ok, but say a mutation occurs which allows a pine beetle to generate 2 generations per summer, the exact time of invasion of this strain on an area cannot be predicted with studies done on the earlier strain. But yes, this is at least to me an interesting paper. Quote on statistical models: “If you can’t find the error in a statistical model, you aren’t looking hard enough, though there’s a possibility it MIGHT be correct”

I had a look at the link to RC that John Mashey provided – thanks. UK residents: there is something intriguing in the posted responses, Comment 12 – surely not who it appears to be? The attitude is consistent with some of his publicly expressed opinions.

jyyh, no problem. Glad you found it useful.

Tom, thanks for the comments. I agree with your second one about the null. Those who claim that the null has to be a zero trend miss that they need evidence that this is an appropriate null. In the case of the surface temperatures, the long-term trend may well be a more appropriate null. It’s interesting, in a sense, that not many have made this case in the past. I think I’ve seen it mentioned somewhere, but it hasn’t been argued very often.

Karsten, thanks. Very useful. I suspected you’d have some thoughts in this one 🙂

Hi Wotts,

Writing from the perspective of an economist/econometrician, the interesting contribution of the paper was indeed the statistical approach taken. In particular, the question of whether the relevant time series data (global temps, radiative forcing, etc) are a) non-stationary or b) trend-stationary with one or more structural breaks. Previous studies have largely advanced the former, while Estrada et al. are claiming the latter.

I’m not sure how familiar you are with this sort of thing, but non-stationarity is one of the fundamental issues facing any statistical analysis of time series data. In short, non-stationary data exhibit a stochastic trend where the mean, variance and/or covariance are changing over time (e.g. a random walk). The problem is that non-stationary series are inherently predisposed to yielding spurious regression results, indicating the presence of meaningful relationships even where there are none.
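The spurious regression problem is easy to reproduce with synthetic data. The sketch below is illustrative only: it regresses pairs of completely independent series on each other and counts how often a naive t-test (|t| > 1.98, roughly the 5% two-sided threshold for about 100 points) declares the slope "significant". The series lengths, trial counts, and seeds are arbitrary choices.

```python
import math
import random


def slope_t_stat(x, y):
    """Naive OLS t-statistic for the slope of y regressed on x (with intercept)."""
    n = len(x)
    x_mean = sum(x) / n
    y_mean = sum(y) / n
    sxx = sum((a - x_mean) ** 2 for a in x)
    sxy = sum((a - x_mean) * (b - y_mean) for a, b in zip(x, y))
    beta = sxy / sxx
    alpha = y_mean - beta * x_mean
    sse = sum((b - alpha - beta * a) ** 2 for a, b in zip(x, y))
    std_err = math.sqrt(sse / (n - 2) / sxx)
    return beta / std_err


def white_noise(n, rng):
    """Stationary series: independent Gaussian draws."""
    return [rng.gauss(0.0, 1.0) for _ in range(n)]


def random_walk(n, rng):
    """Non-stationary series: cumulative sum of Gaussian shocks."""
    total = 0.0
    path = []
    for _ in range(n):
        total += rng.gauss(0.0, 1.0)
        path.append(total)
    return path


def rejection_rate(make_series, n=100, trials=500, seed=1):
    """Share of independent pairs whose slope looks 'significant'."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        x = make_series(n, rng)
        y = make_series(n, rng)
        if abs(slope_t_stat(x, y)) > 1.98:
            hits += 1
    return hits / trials


rate_white = rejection_rate(white_noise)
rate_walk = rejection_rate(random_walk)
print(f"independent white noise:  {rate_white:.2f}")
print(f"independent random walks: {rate_walk:.2f}")
```

For white noise the false-positive rate should sit near the nominal 5%, while for independent random walks the naive test "finds" a relationship most of the time, even though none exists by construction.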

Given that a vast array of economic data are non-stationary (GDP, money supply, etc, etc), econometricians have fixated on this issue for several decades and have developed several approaches to overcoming the problem. Probably the best known is the cointegration framework developed by Engle and Granger. The short version is that if you can show that two non-stationary series are “cointegrated”, then you can use them in a regression framework without fear of obtaining spurious results. (Basically, the series share the same stochastic trend so that these “cancel out”.) Unsurprisingly, this cointegration framework has been the departure point for those previous (statistical-based) studies that have argued climate time series data are non-stationary. See here and here, for example.

Estrada et al, however, are arguing that this cointegration approach is inappropriate. Instead, building on their previous work, they claim that climate data are actually trend stationary (i.e. rising around a deterministic time trend), with several structural breaks. That is, the slope of this trend changes at key points due to exogenous shocks, such as a drop in CFCs due to the Montreal Protocol in the early 1990s.
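As a toy picture of what "trend stationary with a structural break" means, the sketch below builds a synthetic series whose slope changes in a known year and then recovers the break date by brute force: try every candidate split, fit a separate straight line on each side, and keep the split with the smallest total squared error. This is a generic least-squares break search, not the testing procedure used by Estrada et al.; the break year, slopes, and noise level are all invented for the example.

```python
import random


def fit_line(t, y):
    """OLS fit of y on t; returns (slope, sum of squared residuals)."""
    n = len(t)
    t_mean = sum(t) / n
    y_mean = sum(y) / n
    stt = sum((a - t_mean) ** 2 for a in t)
    sty = sum((a - t_mean) * (b - y_mean) for a, b in zip(t, y))
    slope = sty / stt
    intercept = y_mean - slope * t_mean
    sse = sum((b - intercept - slope * a) ** 2 for a, b in zip(t, y))
    return slope, sse


def best_break(t, y, min_len=10):
    """Grid search for the split that minimises the two-segment SSE.

    Returns (first year of the second segment, slope before, slope after).
    """
    best = None
    for k in range(min_len, len(t) - min_len + 1):
        slope_1, sse_1 = fit_line(t[:k], y[:k])
        slope_2, sse_2 = fit_line(t[k:], y[k:])
        total = sse_1 + sse_2
        if best is None or total < best[0]:
            best = (total, t[k], slope_1, slope_2)
    return best[1:]


# Synthetic "temperature" record: flat until 1960, then 0.2 °C/decade,
# plus small Gaussian noise. All of these numbers are made up.
rng = random.Random(42)
TRUE_BREAK = 1960
years = list(range(1900, 2000))
temps = [
    (0.02 * (yr - TRUE_BREAK) if yr >= TRUE_BREAK else 0.0) + rng.gauss(0.0, 0.02)
    for yr in years
]

break_year, slope_before, slope_after = best_break(years, temps)
print(break_year, round(slope_before * 10, 3), round(slope_after * 10, 3))
```

With real data the hard part is not finding a candidate break but deciding whether it is statistically distinguishable from a stochastic trend, which is where the competing tests come in.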

Part of the problem in evaluating the competing claims of these two groups (cointegration versus trend-stationary with structural breaks) is that different statistical tests give you different results. There is no final arbiter to help make a definitive call, so you ultimately have to decide who presents the most compelling case on balance. (Welcome to the murky waters of time series econometrics!) I have to re-read this latest Estrada et al. paper to digest how strong their arguments are, but it is an interesting viewpoint at least.

Grant, thanks. That’s an example of a comment I’m going to have to think about for a while 🙂 I shall have to read some of the papers that you link to, but that may have to wait a while.

I presume the main issue here is related to attribution. From a physics perspective we can explain the surface temperature increase as being a result of radiative forcings (mainly anthropogenic). That, however, doesn’t actually prove that this is the case, it just provides a plausible, physically motivated, explanation as to why the surface temperatures have risen. This work here, and the other work you’ve mentioned, is an attempt to actually attribute the surface temperature rise to anthropogenic influences.

Hi Wotts. Attribution is precisely the issue.

Using empirical time-series methods comes completely naturally to us economists. I had initially assumed that this was also the predominant basis for attributing climate change. It took me a while to appreciate how limited statistical-based approaches actually were (are?) in the climate literature. (As you know, climate scientists typically rely on simulations, GCMs and optimal fingerprinting techniques.)

I believe that this was largely due to the issue of non-stationarity that I mentioned above. Climate scientists understood that this was a problem in their early detection and attribution efforts, and so developed their models as a way of exogenously simulating climate effects.[*] Around the same time, econometricians developed approaches (like cointegration-based methods) to overcome similar problems in macroeconomic data. However, that boat had already sailed at that point and so climate scientists stuck almost exclusively with GCMs.

Nevertheless, things are changing as more outsiders (including economists, obviously) have become interested in better understanding the effects and drivers of climate change. The IPCC AR5 has a pretty decent summary of time-series approaches, including several of the studies that I mentioned above: See Section 10.2.2.

___

[*] “In addition, rigorous statistical tools do not exist to show whether relationships between statistically non-stationary data of this kind are truly statistically significant[…] Thus a physical model that includes both the hypothesized forcing and enhanced greenhouse forcing must be used to make progress.” – Folland et al. (1992, p. 163)

PS – Of course, data limitations regarding specific GHGs were also a big problem for attributing CC using time-series approaches in those early days.

Thanks, Grant. The interesting issue for me as a physicist, is that I would imagine considering the problem from the other perspective. We have a good understanding of the radiative influence of CO2 and water vapour (and how water vapour concentrations change with atmospheric temperature). So, one can then determine, through a physically motivated model, what should happen were atmospheric CO2 concentrations to rise. There are complications. One needs to understand how the excess energy is partitioned between the different components of the climate (land, atmosphere, polar ice, oceans), but one can develop models and get, at least globally, quite a robust idea of what is likely.

It seems, however, that some are suggesting that we still haven’t been able to show that changes can definitely be attributed to humans. I can see how this is an important bit of extra evidence, but if it isn’t us, much of basic physics would have to be wrong.

Of course, if we could attribute it to humans, that would certainly make everything much more convincing but not being able to do so wouldn’t seem like particularly good reason for considering that it may not be anthropogenic influences that have driven the changes.

It’s been a long time since my stochastic processes course, and I’ve never done econometrics, although I’ve read some … but physics has really powerful conservation laws, like conservation of energy. It is very hard for real random walks to persist very long. The surface temperature may jiggle around a lot, but it cannot get very far from the tether of Ocean Heat Content. See NASA GISS Data. Here are the temperature anomalies and the difference from year to year:

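| Year | Anomaly (°C) | Change from previous year (°C) |
|------|--------------|--------------------------------|
| 1996 | 0.33 | -0.10 |
| 1997 | 0.46 | 0.13 |
| 1998 | 0.62 | 0.16 |
| 1999 | 0.41 | -0.21 |
| 2000 | 0.41 | 0.00 |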

So, big El Nino in 1998. What were the chances that 1999 would have been up another 0.16?

~0.
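For anyone wanting to reproduce the year-to-year differences listed above, a minimal sketch follows. It uses only the five anomaly values quoted in this comment, so the 1996 change (which needs the 1995 anomaly) cannot be recomputed here.

```python
# Anomalies (°C) as quoted in the comment above.
anomalies = {1996: 0.33, 1997: 0.46, 1998: 0.62, 1999: 0.41, 2000: 0.41}

years = sorted(anomalies)
# Change relative to the previous year, rounded to the quoted precision.
changes = {
    year: round(anomalies[year] - anomalies[prev], 2)
    for prev, year in zip(years, years[1:])
}

for year in years[1:]:
    print(year, f"{changes[year]:+.2f}")
```

The jump of 0.16 into 1998 is followed by a drop of 0.21, which is the pull-back towards the longer-term level that the comment describes.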

Likewise, not only is the surface temperature absolute value tethered to the Ocean Heat Content, but the possible rates of change are bounded by physical constraints. For instance, it now looks like the 9-10ppm drop of CO2 into 1600AD, unique within the last 1000 years, was at least partially caused by a 50Mperson die-off in the Americas. Any purely-statistical analysis of 1000AD-1750AD could easily draw poor conclusions.

@Wotts

I completely agree that potential problems with statistical approaches to climate attribution are not a compelling case against the theory of AGW. At the same time, complex sciences like climate change rely on multiple lines of evidence, so it certainly doesn’t hurt to further this particular line of research. I’d also suggest that most educated laymen are more familiar with statistical-based approaches, so it would possibly help to get them on board. Similarly, there is some scepticism of GCMs, which have a sense of black-boxiness about them.

@John Mashey

John, recall that arguments for a stochastic trend in temperature stem from the fact that it would be imparted by the various radiative forcings… which are themselves the exogenous function of non-stationary processes like economic activity. It could further be argued that the physical nature of greenhouse processes help to propagate such a stochastic trend — for example, the long-lived nature of GHGs in the atmosphere. See Sections 4.1 and 4.2 of this paper for a more detailed explanation.

Grant,

I certainly wasn’t implying that doing these statistical analyses has no value and certainly getting them on board would seem worthwhile. As we’ve both already indicated though, I can see that there is a slight asymmetry. Being able to attribute the changes to human influence would be a very useful extra stream of evidence. Not being able to do so, however, doesn’t imply the reverse.

@Grant McDermott

I’m not quite sure I understand your comment, but maybe I wasn’t explicit enough in my post.

1) I absolutely think there is a strong stochastic element in year-by-year temperature time series, but better modeled by something like ARIMA/ARFIMA, where the moving average is driven long term by Earth’s energy balance (mostly Greenhouse Gases and aerosols, with land-use/albedo changes) and the noise is a mixture of cycles like solar+ocean oscillations, other aperiodic state changes like ENSO, and completely unpredictable major volcanoes. Of course, climate models are better yet, since based on physics. The year-to-year jiggles easily swamp the year-to-year change in the average until you look at long-enough periods. None of the climate scientists I know claim that temperature is year-to-year deterministic.

People have often done statistical analyses in attribution studies to characterize the natural variability, so my comment about the CO2 drop into 1600AD was cautionary. If one does a statistical analysis of the CO2 jiggles of 1AD to 1750AD, the results would tell you that CO2 can “naturally” jiggle at certain rates, but in fact, there is a pretty good correlation of drops with major plagues of the sort that caused farmland to turn back into forest, with the 1600AD being the most obvious.

2) I just don’t believe random-walk is useful for this. I don’t think you think that, given citation of the papers, but I have seen it claimed (Google: random walk climate), so I wondered.

It’s been discussed often, here.

I don’t think we disagree unless you think random-walk is a good way to model this.

Grant McDermott – I have to consider the claim that radiative forcing is a non-stationary process to be a basic epistemological error, and cointegration wholly inappropriate for climate data.

Milankovitch forcings are simply orbital mechanics, the solar cycle and volcanic activity are physics where we don’t see all the mechanics (hidden variables), and anthropogenic forcings are the results of human decisions based upon available information at any time, not a random walk. Forcings are a non-random input.

And when considering the relationship of those forcings to climate response, you have to be very careful about your tests – unit root tests like the Augmented Dickey Fuller (ADF), such as used in Beenstock et al 2012, are commonly _misused_. The ADF, for example, is a (fairly weak) test for unit roots with potential underlying linear trends or bias. And Beenstock et al use it over time periods of climate and forcings that are distinctly non-linear, invalidating that test.

Tamino discusses this issue here and continued here, noting that the appropriate unit root test is a covariate-augmented ADF (CADF), testing whether temperatures are nonstationary or covariant wrt forcings. The unit root, and hence non-stationary behavior, is flatly rejected with the tests appropriate to the data. I’ll note that it’s also solidly rejected when examining linear sections of the forcings and climate with the ADF, where it is appropriate. This is not at all surprising – conservation of energy in and of itself is an incredibly strong trend-stationary influence.
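For readers who have not met a unit root test, here is a stripped-down Dickey-Fuller-style statistic on synthetic data. It is a teaching sketch with several simplifying assumptions: a plain linear detrending step, no lag augmentation, no covariates (so it is neither the full ADF nor the CADF mentioned above), and an approximate 5% critical value of -3.45 taken from standard tables for about 100 observations with a trend term. The two generating processes and their parameters are invented.

```python
import math
import random


def ols_residuals(y):
    """Residuals of y after removing a least-squares linear trend."""
    n = len(y)
    t_mean = (n - 1) / 2
    y_mean = sum(y) / n
    stt = sum((t - t_mean) ** 2 for t in range(n))
    slope = sum((t - t_mean) * (v - y_mean) for t, v in enumerate(y)) / stt
    return [v - y_mean - slope * (t - t_mean) for t, v in enumerate(y)]


def df_statistic(y):
    """t-statistic of rho in: diff(e_t) = rho * e_{t-1} + u_t, e = detrended y."""
    e = ols_residuals(y)
    lagged = e[:-1]
    diffs = [b - a for a, b in zip(e, e[1:])]
    sxx = sum(a * a for a in lagged)
    rho = sum(a * d for a, d in zip(lagged, diffs)) / sxx
    sse = sum((d - rho * a) ** 2 for a, d in zip(lagged, diffs))
    std_err = math.sqrt(sse / (len(diffs) - 1) / sxx)
    return rho / std_err


def random_walk_with_drift(n, rng, drift=0.017, sigma=0.1):
    """Unit-root process: shocks accumulate and never decay."""
    level = 0.0
    path = []
    for _ in range(n):
        level += drift + rng.gauss(0.0, sigma)
        path.append(level)
    return path


def trend_plus_ar1(n, rng, trend=0.017, phi=0.5, sigma=0.1):
    """Trend-stationary process: shocks decay back towards the trend line."""
    noise = 0.0
    path = []
    for t in range(n):
        noise = phi * noise + rng.gauss(0.0, sigma)
        path.append(trend * t + noise)
    return path


CRITICAL_5PCT = -3.45  # approximate table value; an assumption of this sketch


def rejection_rate(make_series, n=100, trials=300, seed=7):
    """Share of simulated series for which the unit root is rejected."""
    rng = random.Random(seed)
    rejected = sum(
        df_statistic(make_series(n, rng)) < CRITICAL_5PCT for _ in range(trials)
    )
    return rejected / trials


rate_unit_root = rejection_rate(random_walk_with_drift)
rate_trend_stationary = rejection_rate(trend_plus_ar1)
print(f"unit root rejected for random walks:        {rate_unit_root:.2f}")
print(f"unit root rejected for trend + AR(1) noise: {rate_trend_stationary:.2f}")
```

The sketch only works this cleanly because the trend really is linear; applying the same statistic to a series with a strongly non-linear forced trend is exactly the misuse being criticised.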

The (mis)use of economic theories on physics problems, divorced from that physics, results in errors such as Beenstock stating that:

“…temperature varies with solar irradiance and it varies directly with the change in rfCO2 and rfN2O and inversely with the change in rfCH4.” (emphasis added)

If a statistical analysis concludes such wholly unphysical results as an increase in a major GHG cooling the climate, the errors in that analysis completely invalidate it. If you wish to examine cause/effect relationships in a physical system, you need to consider those physics.

The best simple analogy I’ve seen is the minute-long cartoon of a man (trend) walking his dog (noise).

In a prequel to his contributions to #ClimateBall, Vaughan Pratt had to deal with the non-stationarity argument on an old Amazon thread:

http://www.amazon.com/tag/science/forum/ref=cm_cd_pg_pg$1?_encoding=UTF8&cdPage=$1&cdSort=oldest&cdThread=Tx3TXP04WUSD4R1

After these 22 pages or so of comments, there’s also VS’s thread at Bart’s.

***

Basically, the argument amounts to this: either climate behaves the way we think it does, or climate is non-stationary.

Choosing between the red and the blue pill sounds easier.

I think the simplest summary I’ve seen so far is this: temperature is a physical quantity, and hence bounded by basic energy considerations. A random (non-stationary) series is by definition unbounded, as there are no constraints on value. A does not equal B…
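The "unbounded" point can be made slightly more precise in terms of variance. As a sketch, assume independent shocks $\varepsilon_t$ with variance $\sigma^2$ and a starting value $x_0 = 0$ (both assumptions made purely for illustration). For a random walk the variance grows without limit:

$$
x_t = x_{t-1} + \varepsilon_t \quad\Rightarrow\quad \operatorname{Var}(x_t) = t\,\sigma^2 .
$$

For a process that is pulled back towards its mean, such as an AR(1) with $|\phi| < 1$, the variance settles to a finite value:

$$
x_t = \phi\,x_{t-1} + \varepsilon_t \quad\Rightarrow\quad \operatorname{Var}(x_t) = \sigma^2\,\frac{1-\phi^{2t}}{1-\phi^{2}} \;\longrightarrow\; \frac{\sigma^2}{1-\phi^{2}} \quad (t \to \infty).
$$

Strictly speaking this bounds the spread of the process rather than every individual value, but it captures the contrast being drawn: a restoring force, which energy balance provides, keeps the series near its forced trend.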

Quite frankly, claims that climate is a random process are just another (unsupportable) version of the “it’s not us” denial myth.
